We'll All Vibe Code; It'll Be Anarchy

For the past few years, I’ve been busy learning the fundamentals of machine learning and building my own custom models. Small models, of course: spaCy models, some small CNN models, a few hand-rolled ones, and some neat little ML processes using pre-built speech-to-text and OpenCV bits and bobs. Most of what I have been building is specific, proprietary, and sensitive, so I haven’t really tried “vibe coding”. I’ve used LLMs to summarize some topics, do topic searches and what have you, but I hadn’t really tried letting an LLM write code “for me”.

I decided to try something small just to see how it would hold up.

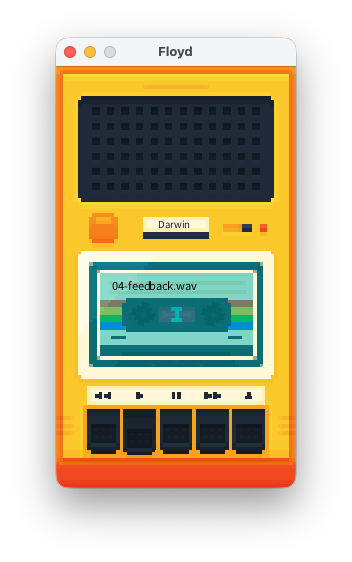

I have this little music application that I wrote to listen to local files. I use it to listen to files on my iPod (yeah, an iPod). I call it Floyd and it looks like this:

I love this little app. It is written in hand-crafted C, with a custom software renderer to draw the UI. Even the macOS windowing code is in C (believe it or not). When the tape heads rotate, they are rotating pixel by pixel.¹ No fancy GPU transformations here. Bliss.
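To make “pixel by pixel” a bit more concrete, here is a minimal sketch of how a software renderer can rotate a small sprite (say, a tape reel) with no GPU involved. It is written in JavaScript rather than C for readability, and it assumes pixels are packed as 32-bit values in a flat, row-major `Uint32Array`. The `rotateSprite` helper is hypothetical; this is not Floyd’s actual code.

```javascript
// Hypothetical helper: rotate a width x height sprite around its center by
// `radians` (clockwise on screen, since the y axis points down).
// Assumes a flat, row-major Uint32Array of packed pixels; not Floyd's code.
function rotateSprite(src, width, height, radians) {
  const dst = new Uint32Array(width * height); // unfilled pixels stay 0 (transparent)
  const cx = (width - 1) / 2;
  const cy = (height - 1) / 2;
  const cos = Math.cos(radians);
  const sin = Math.sin(radians);

  // Inverse mapping: for each destination pixel, rotate backwards by
  // -radians to find the source pixel that lands there. Walking the
  // destination (instead of pushing source pixels forward) avoids holes.
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const dx = x - cx;
      const dy = y - cy;
      const sx = Math.round(cos * dx + sin * dy + cx);
      const sy = Math.round(-sin * dx + cos * dy + cy);
      if (sx >= 0 && sx < width && sy >= 0 && sy < height) {
        dst[y * width + x] = src[sy * width + sx]; // nearest-neighbour sample
      }
    }
  }
  return dst;
}
```

Nearest-neighbour sampling keeps the sketch simple and gives the slightly crunchy, pixel-exact look; bilinear filtering would smooth the edges at the cost of extra work per pixel.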

The UI was designed by a beautiful human, Thomas Fitzpatrick. Floyd is about as far from vibe coded as one could get.

The main feedback I get about Floyd is that people want it to work more like old iTunes did. After patching an iPod to run Rockbox, people want to be able to play songs off the iPod, edit playlists, and maybe download podcasts to it. I would love to build that kind of thing, but I just don’t have the time right now.

For a hoot, I decided to download OpenAI’s Codex and, to give it a fighting chance, let it loose on a UI starter kit to see if it could create the basics of an iTunes-like UI - something I might be able to just move the Floyd internals into.

What it produced wasn’t perfect, but it was very impressive. That it got there with minimal prompting (full session text here for the curious) and in only about 20-30 minutes was also impressive:

Looking over the code, it was what I would expect just about any developer to write for a UI spike. It wasn’t very modular, but it was clean, easy to follow, and easily refactorable.

This, of course, is just the shell of a UI, but if I had done this myself, it would have taken me anywhere from a day to a few days to get this far. Codex, Antigravity and the like seem to be very useful tools. You owe it to yourself to check them out and learn how they work.

I am still not in the “do everything via vibe coding” camp for a number of reasons (some of which are better than others):

- I am still unclear on who owns this code. Do I own it, does OpenAI own it, or do the authors of the training data own it?

- Personally, I love writing code. I do not want to spend my days writing specs or “English as code” (I do like writing research papers, though).

- I have already run into some vibe-coded projects that were just… well… they had a lot of issues.

Putting my bias aside, LLMs’ coding abilities are impressive.

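---

¹ The rotation is similar to this, but operating on C memory:

```javascript
// Rotate the point (x, y) around the center (cx, cy) by `radians`.
// With screen coordinates (y pointing down), positive angles turn counterclockwise.
function rotate(cx, cy, x, y, radians) {
  const cos = Math.cos(radians);
  const sin = Math.sin(radians);
  const rx = (cos * (x - cx)) + (sin * (y - cy)) + cx;
  const ry = (cos * (y - cy)) - (sin * (x - cx)) + cy;
  return [rx, ry];
}
```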