Emergent Behavior

Introduction

I’ve been mulling this over for a few days. I think emergent behaviour in deep neural networks isn’t surprising once you consider what these networks actually do. I also suspect that refining or controlling those behaviours might not be possible. Allow me to explain what I mean.

Like a Record Baby

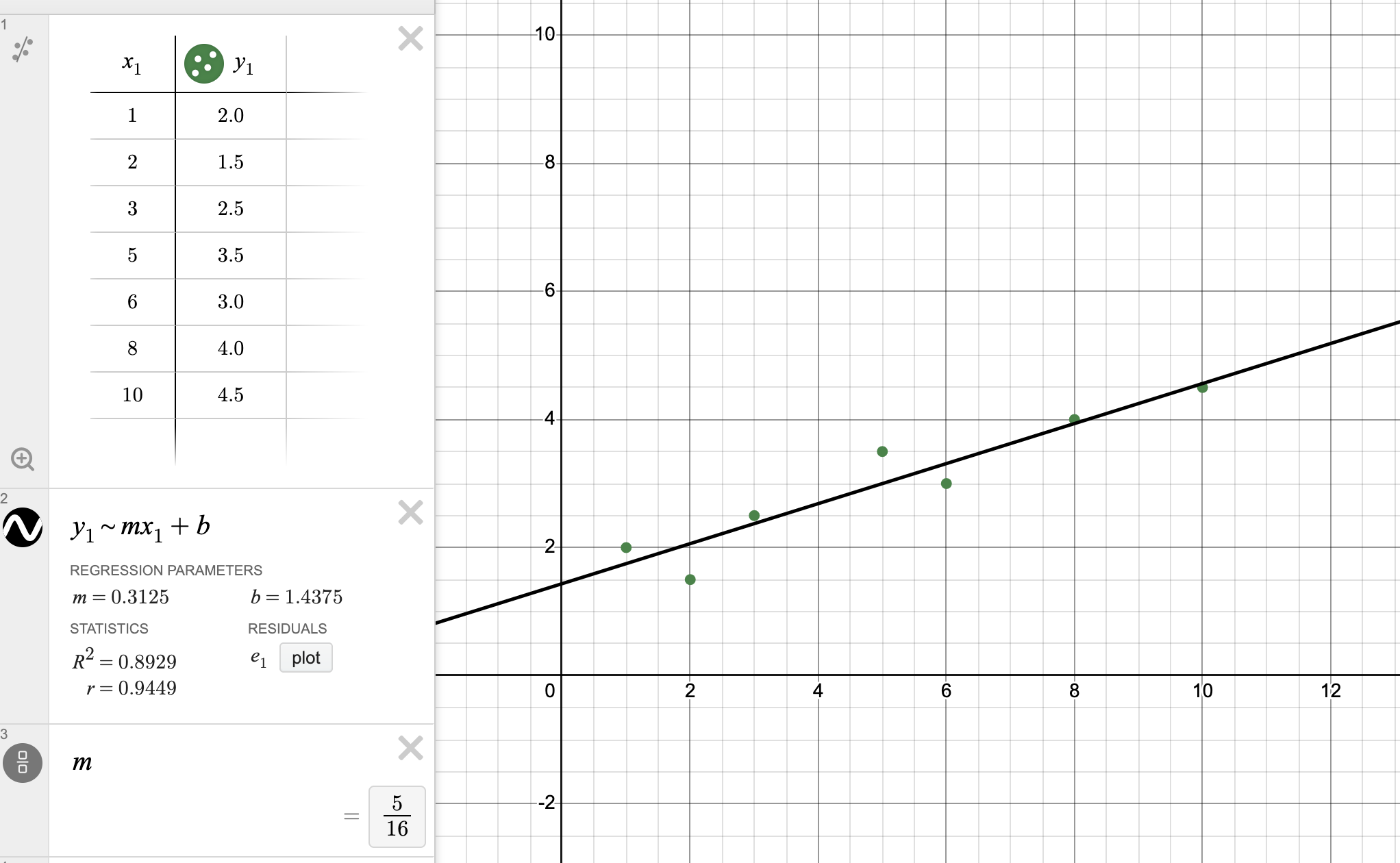

I think most people at this point know that deep neural networks (like the LLMs behind ChatGPT, Gemini, Claude, etc.) are pattern-matching machines. With simpler machine learning models, we are trying to find much simpler patterns, the most basic of which is a straight line:

While you may think this is a useless example, a line is actually very useful and applicable in a large number of contexts. It’s an approximation, sure, but that’s what the vast majority of these models are doing.
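For the straight-line case, here is a minimal sketch of how a model can find that pattern: an ordinary least-squares fit, which picks the slope and intercept that minimize the squared error. The data points and the `fit_line` name are made up for illustration, and this is the textbook technique, not necessarily how any particular model is trained.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through (xs, ys)."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by variance of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    # The best-fit line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical noisy measurements that roughly follow y = 2x + 1.
xs = [0, 1, 2, 3, 4]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]
slope, intercept = fit_line(xs, ys)
print(f"y ≈ {slope:.2f}x + {intercept:.2f}")  # prints: y ≈ 1.97x + 1.06
```

The line never passes exactly through every point, but it captures the trend, which is all an approximation needs to do.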

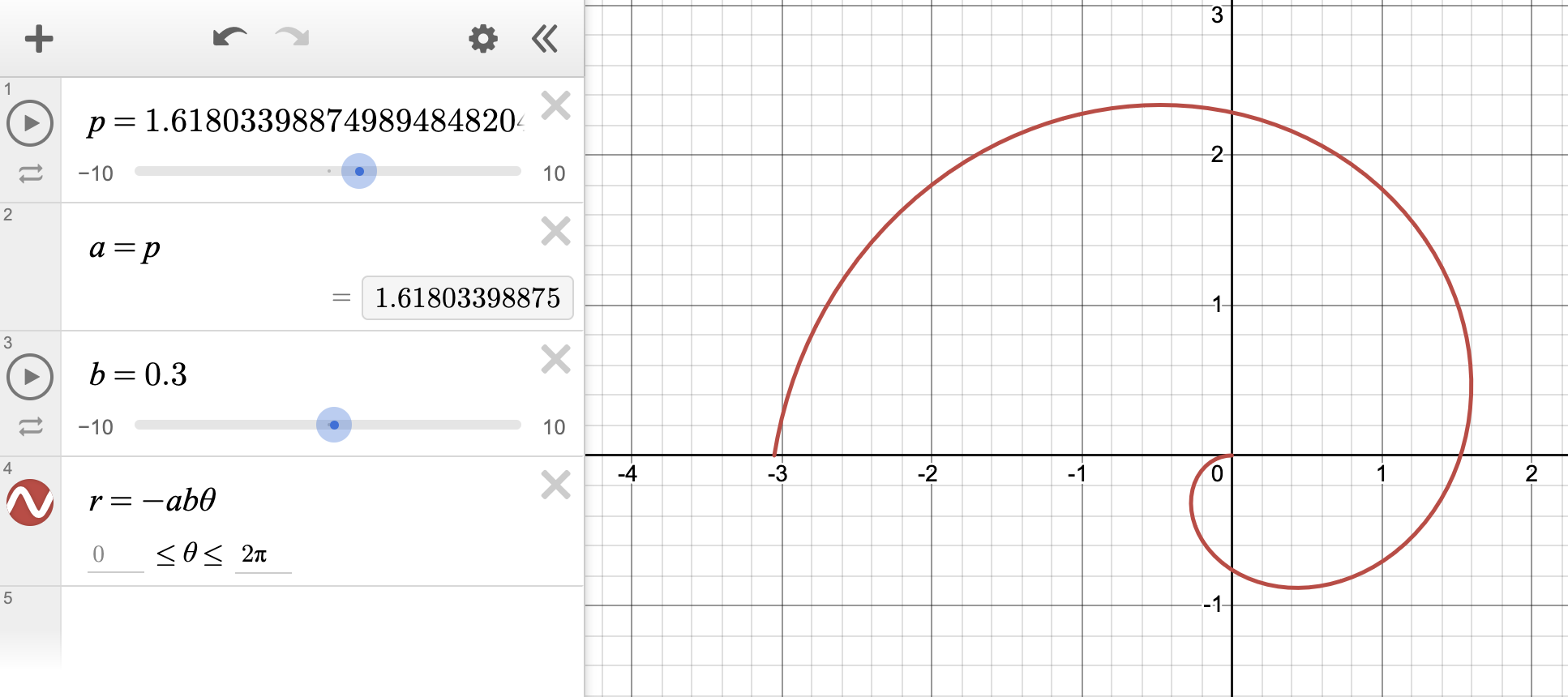

With deep neural networks, the patterns span many dimensions and likely form extremely complex shapes. These “shape pattern approximations” probably apply, at least roughly, in many different contexts throughout reality. To illustrate what I mean, here is something more complex than a line, but not some crazy, multidimensional, impossible-to-understand pattern.

Let’s say I built some model to find the spiral in this shell:

The model produced some approximation that wound up being something like this deep in the network:

Obviously not perfect, and it wouldn’t fit the spiral of the shell exactly, but perhaps that was good enough for whatever we were modeling.
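As a rough stand-in for that "good enough" approximation, here is a sketch that fits a logarithmic spiral, r = a·e^(bθ), a curve commonly used to approximate shell spirals. It is not what a neural network literally does internally. Taking the log turns the spiral into a straight line, ln r = ln a + bθ, so the same least-squares idea as a line fit recovers the growth rate `b`. The "shell" data, the bump term, and the `fit_log_spiral` name are all hypothetical.

```python
import math


def fit_log_spiral(thetas, radii):
    """Fit r = a * exp(b * theta) by least squares on ln(r).

    Taking logs turns the spiral into a straight line,
    ln(r) = ln(a) + b * theta, so a straight-line fit recovers
    b (the growth rate) and a (the scale).
    """
    logs = [math.log(r) for r in radii]
    n = len(thetas)
    mean_t = sum(thetas) / n
    mean_y = sum(logs) / n
    sty = sum((t - mean_t) * (y - mean_y) for t, y in zip(thetas, logs))
    stt = sum((t - mean_t) ** 2 for t in thetas)
    b = sty / stt
    a = math.exp(mean_y - b * mean_t)
    return a, b


# Hypothetical "shell" measurements over two full turns: a spiral with growth
# rate 0.18 plus small bumps (the sin(3 * theta) term) standing in for the
# shell's real contours.
N = 200
thetas = [4 * math.pi * i / N for i in range(N)]
radii = [math.exp(0.18 * t) * (1 + 0.05 * math.sin(3 * t)) for t in thetas]

a, b = fit_log_spiral(thetas, radii)
print(f"r ≈ {a:.3f} * exp({b:.3f} * theta)")
```

The fitted curve follows the overall winding (b comes out close to 0.18) but ignores the bumps entirely, which is the kind of imperfect-but-useful fit described above.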

If that pattern were somehow reused, how far off do you reckon it would be from this:

or this:

or this:

It’s very general: it doesn’t fit the contours of the shell perfectly, and it doesn’t fit the contours of the galaxy perfectly either, but it “basically” fits both.

I think this is what emergent behaviours in LLMs (or deep neural networks in general) are: a pattern learned in one context that happens to roughly fit another. If anything, the surprising part is that a snail shell looks like a galaxy.

Keep Picking at It and It Won’t Heal

However, I also think the “emergent behaviours” of LLMs will never really solidify, and here is why.

If I spent a billion dollars making my model fit every single contour of the snail shell, it would start to look much less like a galaxy. If I spent a trillion dollars making the model look like a galaxy, it wouldn’t fit the snail shell anymore. The more detail I add, the less general the pattern becomes.
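Here is a toy version of that trade-off, under heavy assumptions. Both the "shell" and the "galaxy" are synthetic log-radius profiles that share the same overall growth rate (0.2) but have different small-scale contours (the `sin(5θ)` and `sin(7θ)` terms, chosen arbitrarily). The "general" model is a straight-line fit to the shell; the "detailed" model memorizes every shell sample exactly. Errors are root-mean-square differences in log-radius. This only illustrates the shape of the argument; it is not a measurement of any real network.

```python
import math

N = 400
L = 4 * math.pi  # two full turns
thetas = [L * i / N for i in range(N)]

# Hypothetical log-radius profiles: shared growth rate, different fine detail.
shell = [0.2 * t + 0.1 * math.sin(5 * t) for t in thetas]
galaxy = [0.2 * t + 0.1 * math.sin(7 * t) for t in thetas]


def rms(xs, ys):
    """Root-mean-square difference between two equal-length sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


def line_predictions(xs, ys):
    """Fit a least-squares straight line to (xs, ys); return its values at xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = my - slope * mx
    return [intercept + slope * x for x in xs]


general = line_predictions(thetas, shell)  # captures only the overall winding
detailed = list(shell)                     # "fits every contour": memorizes the shell

for name, model in [("general", general), ("detailed", detailed)]:
    print(f"{name:8s} shell error {rms(model, shell):.4f}  "
          f"galaxy error {rms(model, galaxy):.4f}")
```

With these made-up curves, the detailed model has zero error on the shell but a larger error on the galaxy than the plain line does, because the shell's fine detail doesn't transfer to the galaxy.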

This makes me reflect on what “artificial general intelligence” is supposed to be on a fundamental level. Are people looking for a compressed version of everything in the universe? The single pattern that encompasses everything? Is that even a thing?

OK, I’ve procrastinated long enough, and now I am off to do my homework.