On AI Taking Engineering Jobs

Introduction

AI has the potential to eventually replace software engineers, but the current iteration of LLMs (large language models like GPT-4, Claude, etc.) will not be what does it - at least not with how they are currently being used. I think engineers have an amazing opportunity to ride the wave of a new paradigm of things to build, but it will likely take some effort.

New Kids on The Block

When I was a kid, there was only assembly, BASIC, and C.

Then in the springtime of my software building journey, object oriented programming was the big thing (reciting design patterns by heart was the leetcode of the day). I remember several of my heroes at the time - engineers in the winter of their own journeys, the guys from the Big Iron / COBOL / procedural world - having a very difficult time making the jump to the OO world. Some simply couldn’t adapt, a few took to it with fervor, and some couldn’t be bothered to try. At the time I thought, “I wonder when that’s going to happen to me.”

Then in the summer of my journey, functional programming tried to take hold. It never really got that popular, but aside from not being able to articulate academically why a monad is needed for a side effect, I learned enough to get by.

Now, here in autumn, I feel like there is a new thing taking shape. I am not sure what to call it, but I think of it as something like neural networks as functions. The abstraction of these functions would work in a similar way to normal functions - meaning one could compose them together.

This vague idea does indeed have to do with “AI” (maybe closer to machine learning). I realise I am not explaining myself well, so let me try to explain a bit more.

AI?

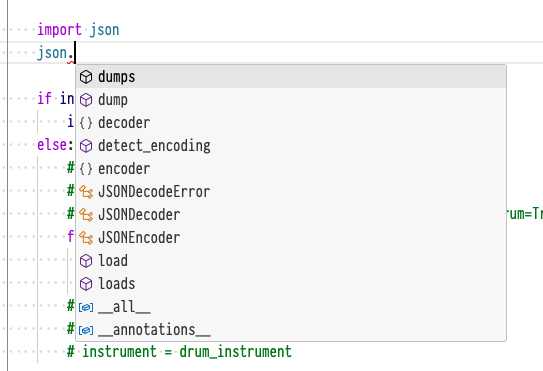

A lot of developers love to create tools to help them write tools. So it is no wonder the current usage of AI is essentially intellisense++. It follows the same line of evolution as the coding tools that came before it.

I first wrote code in something like edit. Just me, the text, and a physical book from the library by my side.

Then code completion came along (I think Microsoft coined the term “intellisense”).

With intellisense, if you knew the function name you were after (or could take a guess), it was almost always a “.” away. At the time, a lot of people called this cheating and didn’t want you to use it in interviews.

Someone then got clever and started making snippets or templates. Snippets are keywords you can type, hit tab, and then many lines of code are written for you.

These were touted to fix a lot of “boilerplate” code.

And now here we are, where LLMs spit out full functions, files, or groups of files.

But really, this isn’t that interesting. I mean, it’s amazing, don’t get me wrong, but really it’s code completion on steroids. It’s a time saver at best, and a technical debt machine at worst.

The main hiccup with what’s going on here is that code is for people, not for computers. I think there is something much cooler right under the surface.

A Function by Any Other Name

I’ve been studying machine learning for something like 6 years now. I’ve been having so much fun with it that I’ve gone back to university to try to really understand what’s going on. I am having a great time, thank you for asking.

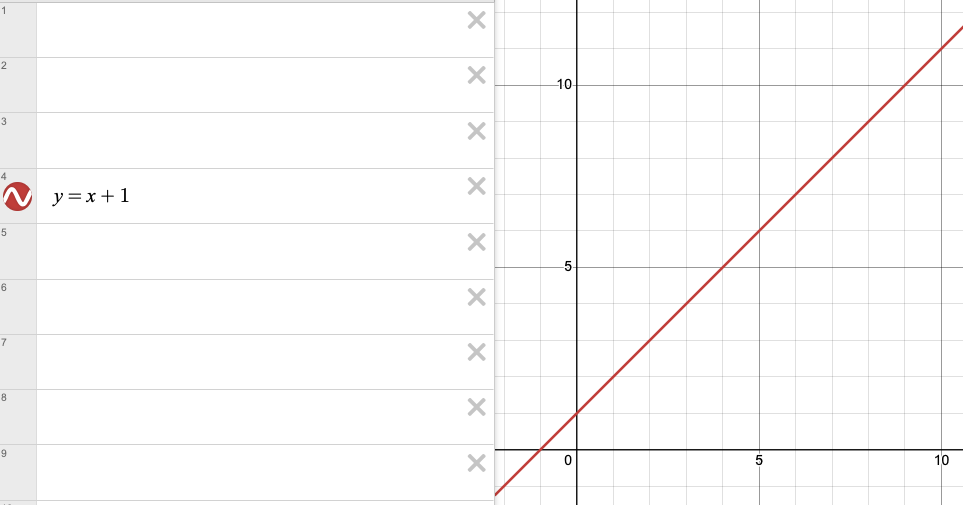

A very subtle thing that dawned on me the other day was that any function can be represented as a graph. I know this is incredibly basic to most people, but, aside from “big O notation”, the concept never really sank in for me in a meaningful way.

Here is an example of what I mean. This function:

    function add(x) {
      return x + 1;
    }

Is actually this:

Or to be all mathsy:

$$ \text{Let } x \in \mathbb{R} $$

$$ f: \mathbb{R} \to \mathbb{R} $$

$$ f(x) = x + 1 $$

But really think about that. That function just sitting there has the potential to solve for every single one of the values on that line. If you think about it, every function in every application has some space of things that it solves (computers can only operate on numbers, after all). It carves up some space in some way.

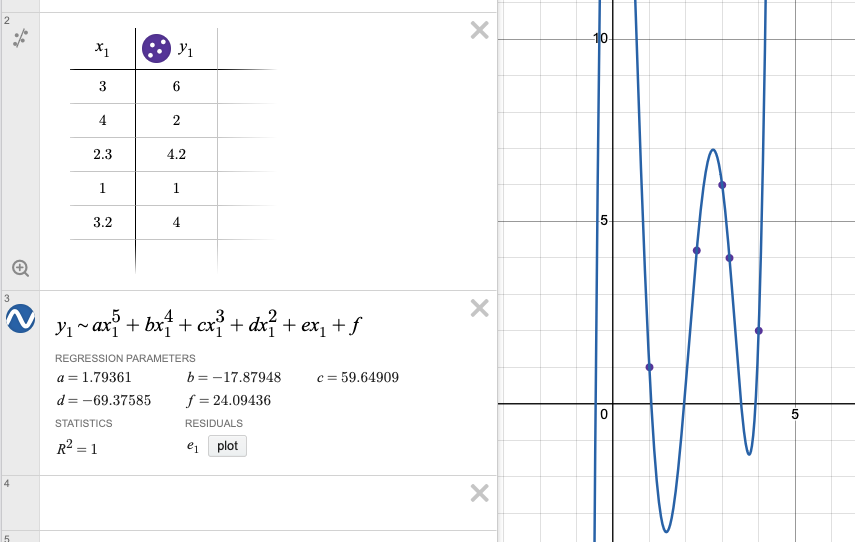

A more complicated example: let’s say you had some function where, when you input $x_1$, you got out $y_1$ as in the following table:

| $x_1$ | $y_1$ |
|---|---|
| 3 | 6 |
| 4 | 2 |
| 2.3 | 4.2 |
| 1 | 1 |
| 3.2 | 4 |

This could be solved by a function like this:

That would not only solve for those points, but for any point on that curve. If you added a few if statements, you could also handle points on either side of the curve - it’s partitioning that space.

Essentially (and simplified a whole, whole bunch) this is how the original machine learning classifiers worked. You would collect a bunch of data points, plot them, and find where the partitions between the points were.
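As a rough sketch of that partitioning idea (and not necessarily the same curve as the original example): one curve that passes through all five points in the table is the Lagrange interpolating polynomial. The names `points`, `curve`, and `side` are made up for this illustration, and `side` plays the part of the “few if statements”.

```javascript
// Data points copied from the table above, as [x1, y1] pairs.
const points = [[3, 6], [4, 2], [2.3, 4.2], [1, 1], [3.2, 4]];

// Lagrange interpolation: the degree-4 polynomial through all five points.
function curve(x) {
  let total = 0;
  for (let i = 0; i < points.length; i++) {
    const [xi, yi] = points[i];
    let term = yi;
    for (let j = 0; j < points.length; j++) {
      if (j !== i) {
        const xj = points[j][0];
        term *= (x - xj) / (xi - xj);
      }
    }
    total += term;
  }
  return total;
}

// The "few if statements": which side of the curve does a point fall on?
function side(x, y) {
  const onCurve = curve(x);
  if (y > onCurve) return "above";
  if (y < onCurve) return "below";
  return "on";
}

console.log(side(1, 5), side(1, 0));
```

A hand-rolled classifier in this spirit would label a new point by which side of the boundary it lands on. Here the boundary is simply forced through the data, which is an assumption made to keep the sketch short.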

So What Dude

The evolution in the past few years has been from these “hand rolled” space partitioning functions, to neural nets that learn how to solve problems themselves. Meaning, they are not specifically programmed like the examples above. If I gave a tiny neural net that table of data, I could train it to solve that problem, without writing any code (aside from building and training the network).
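Here is a minimal sketch of what training a tiny neural net on that table could look like in plain JavaScript, with no libraries. Everything in it is an assumption for illustration: one hidden layer of 8 tanh units, plain gradient descent, a seeded random generator so runs are repeatable, and the names `net`, `predict`, and `train`. The point is only that the mapping from $x_1$ to $y_1$ is learned from the data rather than written by hand.

```javascript
// Data points copied from the table above, as [x1, y1] pairs.
const data = [[3, 6], [4, 2], [2.3, 4.2], [1, 1], [3.2, 4]];

// Small seeded generator so every run starts from the same weights.
function makeRandom(seed) {
  let state = seed;
  return () => {
    state = (state * 1664525 + 1013904223) % 4294967296;
    return state / 4294967296 - 0.5; // value in [-0.5, 0.5)
  };
}

const HIDDEN = 8; // hypothetical size, picked for the demo
const random = makeRandom(42);
const net = {
  w1: Array.from({ length: HIDDEN }, random), // input -> hidden weights
  b1: new Array(HIDDEN).fill(0),              // hidden biases
  w2: Array.from({ length: HIDDEN }, random), // hidden -> output weights
  b2: 0,                                      // output bias
};

// Forward pass: tanh hidden layer, linear output.
function forward(x) {
  const hidden = net.w1.map((w, i) => Math.tanh(w * x + net.b1[i]));
  const output = hidden.reduce((sum, h, i) => sum + h * net.w2[i], net.b2);
  return { hidden, output };
}

function predict(x) {
  return forward(x).output;
}

function meanSquaredError() {
  const total = data.reduce((sum, [x, y]) => sum + (predict(x) - y) ** 2, 0);
  return total / data.length;
}

// Gradient descent, one example at a time, on 0.5 * (output - y)^2.
function train(epochs, learningRate) {
  for (let epoch = 0; epoch < epochs; epoch++) {
    for (const [x, y] of data) {
      const { hidden, output } = forward(x);
      const error = output - y;
      for (let i = 0; i < HIDDEN; i++) {
        // Backpropagate through tanh before touching w2[i].
        const gradHidden = error * net.w2[i] * (1 - hidden[i] ** 2);
        net.w2[i] -= learningRate * error * hidden[i];
        net.w1[i] -= learningRate * gradHidden * x;
        net.b1[i] -= learningRate * gradHidden;
      }
      net.b2 -= learningRate * error;
    }
  }
}

const before = meanSquaredError();
train(5000, 0.01);
const after = meanSquaredError();
console.log({ before, after });
```

Comparing `before` and `after` shows how far training moved the error. The exact numbers depend on the seed and the settings chosen here.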

Now while it would be ridiculously overkill to make that add() function into a neural net, if you go up a few abstractions, what is stopping one from making an entire module / application as a neural net?

The example I am kicking around is, let’s say you had a process that needed to transform XML into JSON. Currently, you’d probably just code it (or ask Chat Jippity to generate the code for you). You could instead, in theory, collect a bunch of XML input and JSON output and train a network to do that task. It would transform the data, and there would be “no code” there. You could even have an LLM generate training data for the function. So to use it would be:

to_json(xml) -> [neural net] -> json

From a software point of view, it would just be a function. A function backed by a model.

If you put enough of these tiny models together, and then had a neural net on top of that (or some kind of specialised ensemble), you would have a true no-code application. And to “fix a bug”, you would just feed it examples.
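To make the “fix a bug by feeding it examples” idea a bit more concrete, here is a toy sketch. It is not a neural net: a nearest-example lookup stands in for the trained model purely to keep the code short, and `makeModelFunction`, `teach`, and `compose` are hypothetical names invented for this illustration.

```javascript
// Stand-in "model": answers with the output of the closest known example.
// A real version would be a trained network; this lookup is an assumption
// made so the sketch stays small and runnable.
function makeModelFunction(examples) {
  const known = [...examples];
  const fn = (x) => {
    let best = known[0];
    for (const example of known) {
      if (Math.abs(example.input - x) < Math.abs(best.input - x)) {
        best = example;
      }
    }
    return best.output;
  };
  // "Fixing a bug" means adding an example, not editing code.
  fn.teach = (input, output) => {
    known.push({ input, output });
  };
  return fn;
}

// From the caller's side these are just functions, so they compose.
const compose = (f, g) => (x) => g(f(x));

const double = makeModelFunction([
  { input: 1, output: 2 },
  { input: 2, output: 4 },
]);
const addOne = makeModelFunction([
  { input: 2, output: 3 },
  { input: 4, output: 5 },
]);
const doubleThenAddOne = compose(double, addOne);

const wrongAnswer = double(3); // no example near 3 yet, so it falls back to 2 -> 4
double.teach(3, 6);            // the "bug fix" is one more example
const fixedAnswer = double(3);

console.log(wrongAnswer, fixedAnswer, doubleThenAddOne(2));
```

The caller never sees how `double` works inside. It only sees a function whose behaviour changes when it is given more examples.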

This is when software engineers will not be needed and when things will get really crazy: when LLMs (or something like them) create custom neural nets that solve specific problems.

Conclusion

Even if it is only a fun sci-fi story at the moment, I think this concept is interesting. With my limited knowledge, it seems not only possible; I think this is where things are heading. That is, if you believe that AI will be building software in the future, it seems a bit silly to think it would do that by writing Python. At some point, it’ll probably just spit out opcodes that go directly to the processor - but that is another post.

By the way, don’t get too nervous about finding work in the meantime. There are still COBOL programmers. I know people who never quite got the hang of object oriented programming who are still in the industry.

Times are tough right now to be sure, but they are tough for everyone in every industry. AI is probably not your obstacle; if you learn how it works, it is more than likely the way… for a while.